Lies, Damned Lies, and Benchmarks Part 1: All About that Base[line]

I’ll open this post with an explanation of the title of this series for those who may not be familiar with the famous quote on which it is based: “There are three kinds of lies: lies, damned lies, and statistics.” I’ll be looking at some benchmarks in this series and basically poking holes in them. Let me be clear: I don’t mean to imply that anyone is actually lying in their benchmarks. The important thing to remember about benchmarks is that, like statistics, they’re just numbers. And if you change enough variables, you can almost always come up with a number that reinforces your position. I take care when I do benchmarks to ensure they are both realistic and fair, and I reserve the right to point out when other benchmarks are contrived or disingenuous. But in the end, I don’t expect you to take my word for it either. You should always test for yourself. I aim to give enough detail to back up my numbers that you can re-create them or at least understand how they came to be, but that doesn’t mean you shouldn’t question them.

The reason for this series is that I recently became aware of another image scaling component that promises to deliver superior quality and performance over System.Drawing/GDI+ for server-side applications (hey, that’s my line!). I had intended to do all of my benchmarking against my own reference GDI+ resizer so that I could be sure I was doing a like-for-like comparison where possible and because GDI+ was the only real competition I had. In cases where MagicScaler took advantage of a shortcut that wasn’t available in GDI+ or did some additional processing for quality that GDI+ couldn’t match, I’d point that out. But I never got around to doing those detailed benchmarks, and it’s just as well, because now I have something much more interesting to compare to.

The benchmarks in question come from the documentation for the FastScaling plugin from the ImageResizer library. I previously looked at ImageResizer when discussing optimization of DrawImage() and used it as an example of how performance can suffer if the GDI+ settings are not chosen carefully. That the FastScaling plugin is part of the same project is quite interesting because they make some truly impressive performance claims, especially given the poor performance of their GDI+ implementation. I’ll start by quoting from the page linked above.

Unlike DrawImage, [FastScaling] uses orthogonal/separable resampling, and requires less of the CPU cache. On a 4-core laptop, this can translate into a 16-30X increase in throughput when benchmarked against DrawImage for photos from your average 16MP pocket camera. Even when executed on a single-thread, this can mean a 5-12X performance advantage. On a Azure D14 instance, FastScaling has been measured to be 43x faster).

I’ll address the first sentence of that quote later. For now, I’ll focus on the meat of it: the numbers.

There are a lot of numbers crammed in there, and to be quite honest they gave me a bit of a scare at first glance, as my own benchmarking of MagicScaler had shown it to peak at around 10x the performance of GDI+ on a single thread. And the performance gains I achieved required some techniques that aren’t even available in GDI+, like DCT-domain scaling in the JPEG decoder and planar processing of the image. So this must be one fast scaler indeed. The documentation page also explains that the plugin requires the Visual C++ redistributable package, and it ships as platform-specific binaries in the NuGet packages. Surely I wouldn’t be able to match the performance of carefully-tuned C++ (and possibly assembly?) code with my C# implementation, no matter how awesome the JIT. It’s a good thing I optimized the entire pipeline, or this would be a non-starter. But there’s plenty that looks fishy in those numbers, so I wasn’t nervous for too long. And I wasn’t about to accept those benchmarks without testing them for myself.

Fortunately, I just happen to be using a 4-core laptop right now and have some nice 16MP JPEGs lying around my office, so we can put those numbers to the test. As for the Azure D14 (16 Xeon cores / 112GB RAM) instance, I’m not even going to bother with that. First of all, either the resizers scale* well with extra processors, or they don’t. We can see that as well with 4 cores as we can with 16. Secondly, I don’t know too many websites that run on that kind of hardware. That’s a beefy box for a web server, and we usually scale web servers out, not up. I’d argue that my quad-core laptop is much more representative of a typical web server than a D14 is. Plus I’m lazy and don’t want to set one up. So there.

* It gets really difficult to disambiguate between performance scalability and image scaling in the same topic. I trust my audience is astute enough to know which ‘scale’ I mean given the context, so I won’t bother.

Then there’s this:

Will you always see benefits this drastic? No. For tiny images that can fit - in their entirety - on your CPU cache (say 300x300), the gain is small - 25% on a single thread, or 0.8 x core count for a throughput increase.

If you have a large number of cores, you might see much larger increases in throughput.

Including the jpeg encoding and decoding cost (which FastScaling does not address), enabling FastScaling will translate into roughly a 4.5-9.6X increase in overall performance for 16MP images.

Ah, that’s more like it… a touch of realism. So the first set of numbers didn’t include decoding or encoding, which makes them fairly pointless. Decoding and encoding are part of the process, and they can’t rightly be excluded just to make the numbers bigger. Being able to time the scaling step on its own also suggests the entire image is decoded before processing begins, and for components designed for server-side use, that’s a bad practice. Keep in mind, a decoded 16MP RGB image would occupy 48MB of memory. If you’re dedicating that much memory to serving a single image request, you’re doing it wrong. Maybe we have different definitions of scalability, but that does not compute for me. The real-world numbers are the only ones that matter here, but they’re not the ones that are bolded.
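The 48MB figure is simple arithmetic: a fully decoded image at 8 bits per channel needs width × height × channels bytes. This sketch also shows, for contrast, what a pipeline holding only a horizontal band of rows would need; the 16-row band is a hypothetical example, not a number taken from any particular library.

```python
def decoded_size_bytes(width: int, height: int, channels: int = 3) -> int:
    """Bytes needed to hold a fully decoded image at 8 bits per channel."""
    return width * height * channels


def band_size_bytes(width: int, rows: int, channels: int = 3) -> int:
    """Bytes needed to hold only a horizontal band of rows (streaming approach)."""
    return width * rows * channels


# A 16MP (16 million pixel) image decoded in full as RGB:
print(decoded_size_bytes(4000, 4000))  # 48000000 bytes, i.e. 48MB
# A 6000x4000 (24MP) image decoded in full as RGB:
print(decoded_size_bytes(6000, 4000))  # 72000000 bytes
# A hypothetical 16-row band of that same 6000-pixel-wide image:
print(band_size_bytes(6000, 16))  # 288000 bytes
```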

There’s one more clue in that quote as to the nature of the performance numbers given. They’re very much dependent on scaling up to more than one core. That explains the D14. More cores means a bigger number. The reason for that is simple: DrawImage() is non-reentrant, so no matter how big your box, it will only ever use one core per process. Removing that limitation is an instant performance win since you can now scale up. Of course, there’s a reason why DrawImage() is implemented that way, and I’ll show you what that might be in the next post. It’s worth building something that removes that limitation, but only if you address the underlying reasons for that limitation (hint: they didn’t).

One final quote before we move on:

FastScaling can make a range of adjustments to favor speed or quality on very large images. These typically set default values for the filter type/size and for averaging optimizations.

Averaging optimization: If you're downscaling an image to 1/20th of its size, FastScaling will use an averaging filter to scale it to 1/6th, then scale the remaining 1/3.333 using a high-quality filter. Since no filters use a window larger than ~3x the scale filter, this does not measurably affect quality. You can make this optimization more agressive [sic] by increasing the speed value, or reduce it by specifying a negative value.

Ok, so that part about ‘averaging optimization’ is referring to a technique I also employ in MagicScaler. It’s a fairly common practice, and as I mentioned in a previous post, it would be possible to do this with DrawImage() as well. In fact, I might add it to my GDI+ resizer just for grins. It does measurably affect quality, as we’ll see later, but it’s a tradeoff that is acceptable sometimes.
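The split in the quoted example (1/20 overall, 1/6 by averaging, then 1/3.333 by the real filter) can be reproduced by picking the largest integer averaging factor that still leaves at least some minimum ratio for the high-quality filter. The minimum of 3 below is my guess based on the ~3x remark in the quote, not FastScaling’s actual rule, and `box_downscale` is a plain 1D box average rather than anyone’s production code.

```python
import math


def split_ratio(total_ratio: float, min_remaining: float = 3.0) -> tuple[int, float]:
    """Split a downscale ratio into an integer box-averaging factor and the
    ratio left over for the high-quality filter (hypothetical heuristic)."""
    factor = max(1, math.floor(total_ratio / min_remaining))
    return factor, total_ratio / factor


def box_downscale(row: list[float], factor: int) -> list[float]:
    """Average each run of `factor` samples into one (a 1D box filter).
    Trailing samples that don't fill a whole run are dropped for simplicity."""
    usable = len(row) - len(row) % factor
    return [sum(row[i:i + factor]) / factor for i in range(0, usable, factor)]


factor, remaining = split_ratio(20)
print(factor, round(remaining, 3))  # 6 3.333
print(box_downscale([0, 0, 6, 6, 3, 9], 2))  # [0.0, 6.0, 6.0]
```

The averaging pass is cheap because each output sample is a plain mean of a fixed run of inputs. The trade-off is that a box average is a lower-quality filter than the cubic that finishes the job, which is where the quality cost comes from.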

The less clear part of that quote is the ‘range of adjustments to favor speed or quality’. It certainly looks like the numbers given are the result of some of these adjustments and shortcuts, but there are no specifics. The graphs shown in the page have labels for ‘speed prioritized’ and ‘quality optimized’, but it’s not clear how much quality was sacrificed in order to bump up the performance numbers, nor does there seem to be a speed comparison with identical quality to DrawImage(). That’s not cool if you ask me. Relative performance is only meaningful if you understand the baseline, and too many variables were changed for the numbers to be comparable.

To be fair, it does appear the source code for the benchmark program is available on GitHub, but I don’t really think it’s right to put all the big numbers and pretty graphs on the page and then expect someone to dig into your source code to figure out just how many shortcuts you took to get those numbers. The numbers should reflect real-world scenarios, and where quality differs, that should be explicitly spelled out or, better yet, shown. It’s totally cool to trade quality for performance, but let’s be clear about it. Those are the rules I’ll be following in this series.

/rant.

Evening the Playing Field

With that last point about image quality in mind, I figured it would be best to start off by measuring baseline performance between GDI+ and FastScaling at the same quality level. If, as that opening quote implied, FastScaling is implemented in a cleverer way than DrawImage(), it should win any head-to-head comparison by a significant margin, without having to sacrifice quality to do it. We’ll be verifying that.

The first step in establishing that baseline will be examining the resampling algorithms offered by FastScaling so we can match them up with their equivalents in GDI+ (and MagicScaler). Ensuring that we’re using the same algorithms/parameters means we’ll know we’re doing the same work and getting the same output quality.

Looking through the docs, I see FastScaling has a long list of supported resampling filters, so I won’t list them all here. It appears they’re using standard names for some of the filters, and some appear to be made-up variants of those standards. Some, I’ve never heard of. That makes comparisons tricky, but we’ve seen before that ResampleScope can reveal much about the implementations.

I’ll point out now that ImageResizer and FastScaling are open source, so I could just dig into the source and find out how they’re actually implemented, but I won’t be doing that. There is much overlap between those projects and mine, so I prefer to remain ignorant of their implementations. For that reason, I’ll stick with the documentation available on the website.

Fortunately (or unfortunately, depending on how many comparisons you wanted to see), there appears to be very little overlap in the capabilities of GDI+ and FastScaling. We know from my earlier experimentation with the GDI+ InterpolationMode values that only two of them are actually suitable for large-ratio downscaling, and only one of those appears to match up with the filters included in FastScaling.

From the docs:

To mimic DrawImage precisely, use Cubic

Ok.

I’ll assume they mean DrawImage() using the HighQualityBicubic interpolation mode, since that’s the default in ImageResizer. We saw in my review of the GDI+ InterpolationMode values that HighQualityBicubic is actually adaptive, depending on the resize ratio. I doubt they actually mimicked that ‘precisely’, but we’ll see what the graphs say.

In addition to specifying the algorithm/filter, I’ll also need to explicitly disable the averaging shortcut (and possibly other shortcuts). Those might not come into play yet, since ResampleScope uses small images anyway, but I’ll use this setting just to be safe and to be consistent with the next few tests:

&down.speed = -2..4 (default 0). -2 disables averaging optimizations.

I’m using FastScaling 4.0.5 x64, freshly installed from NuGet, and I ended up with the following options for my tests:

width={w}&height={h}&mode=stretch&format=png&fastscale=true&down.speed=-2&down.filter=cubic
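If you want to vary the width and height per test, the same query string is easy to assemble programmatically. The parameter names and values below are exactly the ones above; the `fastscaling_query` helper itself is just an illustration.

```python
from urllib.parse import urlencode


def fastscaling_query(width: int, height: int) -> str:
    """Build the ImageResizer query string used for these tests."""
    params = {
        "width": width,
        "height": height,
        "mode": "stretch",
        "format": "png",
        "fastscale": "true",
        "down.speed": -2,  # -2 disables averaging optimizations
        "down.filter": "cubic",
    }
    return urlencode(params)


print(fastscaling_query(400, 267))
# width=400&height=267&mode=stretch&format=png&fastscale=true&down.speed=-2&down.filter=cubic
```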

The ‘mode=stretch’ is necessary for now because ResampleScope works with images resized on a single dimension. Let’s try those settings and see what we get. First I resize down by 2px.

This looks mostly like a Cardinal (0, 1) Cubic, which means it’s not a match for GDI+ at all at this size (it’s not until a much larger downscale ratio that GDI+ uses a standard cubic). But there’s something strange going on with the top of the curve. It’s slightly flattened, and it pokes up slightly above 1. Let’s compare that to MagicScaler.

Yep, it’s not quite right. This is not a great start, but I guess we’ll call it close enough for now. Moving on to a 50% shrink…

Here, the shape of the curve is even more wrong, with the ends jumping up to 0 prematurely. This will lead to a fuzzier image, as it’s the negative lobes that give this filter its sharpening effect. Contrast that with the curve from MagicScaler, which remains the same regardless of resize ratio.

And finally, we’ll do a shrink to 25%. At this ratio, GDI+ does use a Cardinal (0, 1) Cubic, so if it gets this one right, it’s at least a match in some cases.

Nope. It’s even worse. Here it is compared with the GDI+ curve. Note again that the ‘arms’ in the correct implementation go smoothly (well, as smoothly as this graph can show) up to the origin line.
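For reference, here is the kernel those correct curves trace, written in the Mitchell-Netravali (B, C) parameterization. I’m assuming ‘Cardinal (0, 1)’ means B=0, C=1, which is the Keys cubic with a = -1. The kernel is nonzero only for |x| < 2, so its full support is 4 units wide, and the negative ‘arms’ between 1 and 2 are what give it its sharpening effect.

```python
def cubic_bc(x: float, b: float = 0.0, c: float = 1.0) -> float:
    """Mitchell-Netravali cubic; b=0, c=1 gives a cardinal cubic (Keys a=-1)."""
    x = abs(x)
    if x < 1.0:
        return ((12 - 9 * b - 6 * c) * x**3
                + (-18 + 12 * b + 6 * c) * x**2
                + (6 - 2 * b)) / 6
    if x < 2.0:
        return ((-b - 6 * c) * x**3
                + (6 * b + 30 * c) * x**2
                + (-12 * b - 48 * c) * x
                + (8 * b + 24 * c)) / 6
    return 0.0  # outside the support radius of 2


for x in (0.0, 0.5, 1.0, 1.5, 2.0):
    print(x, cubic_bc(x))
```

Evaluating a few points shows the curve starting at 1, crossing zero at 1, dipping to -0.125 at 1.5, and returning smoothly to zero at 2. A filter that cuts the curve off before 2 loses part of that negative lobe.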

What’s Wrong with This Picture?

Ok, so the graphs don’t match expectations, but what does this mean for actual image quality? Fear not, I have visual comparisons to go with those mathematical ones. I have a 6000x4000 reference image I’ve been using for a lot of my testing. I like it for a few reasons:

- At 24MP, it’s among the biggest images you’ll find in the wild, but not unrealistically big. Most of the new mid-to-high-end DSLRs have standardized around 24MP in their last generation or two.

- The bigness of the image exaggerates the impact of the scaler performance. This is the same reason the FastScaling benchmarks used 16MP images, I assume. I’m just kicking it up a notch for now. Bam!

- It’s a human face. Humans are very attuned to spotting differences in faces, so even without a mathematical comparison, it’s usually easy to spot differences in quality or sharpness.

- In order to test these components thoroughly, we need to be able to experiment with images in different container formats and pixel formats. I happen to have 14 variants of this image saved every which-way.

- Get it? Which-way? It’s a witch. Witches are magic. My thing is called MagicScaler. I’ll see myself out…

I’ve applied the settings used above and resized the original JPEG version of the 6000x4000 reference image to a 400x267 PNG in my test/benchmarking harness. The reason I output PNG at this stage instead of JPEG is that I want to compare the pixels output from each scaler, and JPEG encoding is lossy, meaning it may amplify or cancel out small differences. We’ll switch to JPEG output once we’ve normalized the settings and verified the outputs are the same. Here are the results of my first trial:

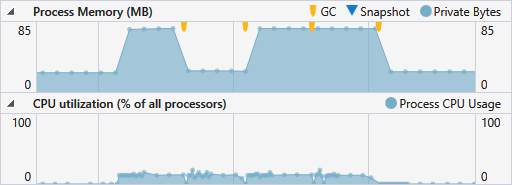

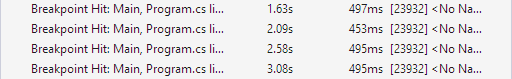

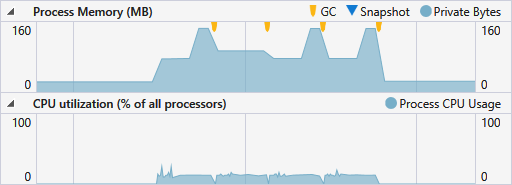

First, let me explain my test harness. The four images shown are the results of a single warm-up run for each test. Following the warm-up, I run each test 10 times serially and display the average and standard deviation of those 10 runs. Next, I run 4 tests in parallel and show their average, standard deviation, and total elapsed time. Finally, I do the same for 8 in parallel. I’m running these on my dev laptop, which is a quad-core i7 with Hyper-Threading enabled. 8 threads is the most it can actually run simultaneously, so that’s as far as I go with my parallel testing. It should be good enough to give you an idea how each scaler… erm… scales… performance-wise. The visual results are kind of a sanity check to make sure the numbers reflect the work I thought I was doing in the test.
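The harness logic is simple enough to sketch. This is a Python approximation of the procedure just described (one warm-up, 10 serial runs, then batches of 4 and 8 in parallel), with `fake_resize` standing in for a full decode/resize/encode; it is not my actual benchmark app. Note that in CPython, CPU-bound pure-Python work won’t scale across threads because of the GIL, so treat the parallel part as showing the bookkeeping rather than producing realistic numbers.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def timed(workload: Callable[[], None]) -> float:
    """Run the workload once and return the elapsed time in milliseconds."""
    start = time.perf_counter()
    workload()
    return (time.perf_counter() - start) * 1000.0


def run_benchmark(workload: Callable[[], None], serial_runs: int = 10,
                  parallel_counts: tuple[int, ...] = (4, 8)) -> dict:
    timed(workload)  # warm-up run, excluded from the results
    serial = [timed(workload) for _ in range(serial_runs)]
    results = {"serial": (statistics.mean(serial), statistics.stdev(serial))}
    for n in parallel_counts:
        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=n) as pool:
            times = list(pool.map(lambda _: timed(workload), range(n)))
        total = (time.perf_counter() - start) * 1000.0
        # (average, standard deviation, total elapsed) for the parallel batch
        results[f"parallel_{n}"] = (statistics.mean(times), statistics.stdev(times), total)
    return results


def fake_resize() -> None:
    """Hypothetical stand-in for decoding, resizing, and encoding one image."""
    time.sleep(0.005)


for name, stats in run_benchmark(fake_resize).items():
    print(name, [round(v, 1) for v in stats])
```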

Which brings me to the visual results of this run. We could talk about the performance numbers now, but I think it’s clear from the visual results that the work done was not the same for all cases, so let’s hold off on that. The WIC result looks pretty bad to me, with both aliasing and blurring. I’m running Windows Server 2012 R2 on this machine, so that’s actually the best we can do with WIC quality-wise. I’ll leave it there as a reference for just what kind of performance you can get if you’re willing to sacrifice quality for speed. We’ll focus on matching up the quality on the remaining three images, with the GDI+ one as the reference.

The GDI+ and MagicScaler results look the same to me, so I’ll check them in Beyond Compare’s picture compare tool to see how close they are. If you missed my last use of this tool, I have it set with a tolerance of 1, meaning pixels shown in grey are identical between the images, pixels in blue differ by 1 (out of 256), and pixels that differ by more than 1 are red.

Those are darn-near identical, and certainly close enough that you can’t see a difference with your human eyes. The only red pixels are near the edge, where GDI+’s weird ‘mirror’ treatment differs from my more normal ‘extend’ treatment of edge pixels. Considering the difference in implementations, I’ll call these a match visually. Note that for this test, I had all optimizations that would affect image quality disabled in MagicScaler. I also disabled its auto-sharpening. Again, the idea is to establish a baseline where all the components are doing the same work. Only then can we compare them fairly.
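The grey/blue/red classification is easy to reproduce if you don’t have Beyond Compare handy. This sketch assumes both images are already decoded to equal-length lists of RGB tuples and classifies each pixel by its largest per-channel difference; how Beyond Compare itself combines channels is an assumption on my part.

```python
from collections import Counter

Pixel = tuple[int, int, int]


def classify(a: Pixel, b: Pixel, tolerance: int = 1) -> str:
    """'grey' if identical, 'blue' if within tolerance, 'red' otherwise."""
    diff = max(abs(x - y) for x, y in zip(a, b))
    if diff == 0:
        return "grey"
    return "blue" if diff <= tolerance else "red"


def compare(img_a: list[Pixel], img_b: list[Pixel], tolerance: int = 1) -> Counter:
    """Count how many pixels fall into each category."""
    if len(img_a) != len(img_b):
        raise ValueError("images must have the same number of pixels")
    return Counter(classify(a, b, tolerance) for a, b in zip(img_a, img_b))


reference = [(10, 20, 30), (40, 50, 60), (70, 80, 90)]
candidate = [(10, 20, 30), (41, 50, 60), (70, 80, 95)]
print(compare(reference, candidate))
```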

Now let’s see how the FastScaling output from that run compares to GDI+. Remember, the docs said its Cubic mimics DrawImage() ‘precisely’.

Nope. The graphs told us it would be blurry, and I could tell just by looking at it that it was significantly more blurry (even more so than the WIC output). There’s the proof. So it really doesn’t matter how fast this was. It was wrong. As in not what I expected and not what I asked for.

At this point I was about ready to declare the FastScaling implementation ‘busted’ and give up, but I had one more look at the docs and spotted something that held promise:

&down.window=0.5..3 (default 1..3, depends on filter). This describes the support window (input pixel set) size relative to the output pixel's corresponding input area. Values of 1 will involve only corresponding pixels. Values of 2 will involve the corresponding area, plus half again on each side. Values of three will triple the number of input pixels.

This actually explains the problems we saw in the graphs. It appears the sample window is being set incorrectly initially, and it’s possibly being changed relative to the resize ratio. That’s why the ‘arms’ were cut off on those graphs. You see, the correct sample window for this cubic function is 4. That’s the width of the correct curves in the graphs above. But 4 isn’t even listed in the valid range of values for this option… I figured I’d try the max value allowed and added &down.window=3 to my config for ImageResizer. The image was still blurry, as one might expect. Next I went out on a limb and tried plugging in the correct value of 4:

Et voilà! Now the WIC image is the only one that looks bad. I’ll compare the FastScaling output to GDI+ again in Beyond Compare just to be sure my eyes don’t deceive me.

Much better. This one also shows a few red pixels around the edges, but again, the GDI+ implementation is weird, so I’ll call this a match.
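Why would a window of 3 be blurrier than 4? This sketch computes the filter weights for one output pixel, assuming (from the doc quote) that `down.window` is the total support width measured in units of the output pixel’s corresponding input area, so the kernel gets cut off at |x| < window/2. It models the cutoff only; it doesn’t capture whatever ratio-dependent adjustments FastScaling may also make. With the cubic’s radius of 2, a window of 3 chops off the outer part of the negative lobes, and once the remaining weights are renormalized, less of the sharpening survives.

```python
import math


def cubic(x: float) -> float:
    """Cardinal cubic (B=0, C=1; Keys a=-1), nonzero only for |x| < 2."""
    x = abs(x)
    if x < 1.0:
        return x**3 - 2 * x**2 + 1
    if x < 2.0:
        return -x**3 + 5 * x**2 - 8 * x + 4
    return 0.0


def taps(center: float, scale: float, window: float) -> list[tuple[int, float]]:
    """Normalized (input_index, weight) pairs for one output pixel.

    center: the output pixel's center in input-pixel coordinates
    scale:  downscale factor (2.0 means halving), which stretches the kernel
    window: support width relative to the output pixel's input area (assumed meaning)
    """
    radius = window / 2.0  # cutoff, in kernel units
    lo = math.floor(center - radius * scale)
    hi = math.ceil(center + radius * scale)
    raw = []
    for i in range(lo, hi + 1):
        x = (i + 0.5 - center) / scale  # distance from input pixel i's center, in kernel units
        if abs(x) < radius:
            raw.append((i, cubic(x)))
    total = sum(w for _, w in raw)
    return [(i, w / total) for i, w in raw]


for window in (3, 4):
    t = taps(center=10.0, scale=2.0, window=window)
    negative = sum(w for _, w in t if w < 0)
    print(window, len(t), round(negative, 4))
```

For a 50% shrink, a window of 3 keeps 6 input pixels with about -0.134 of total negative weight, while a window of 4 keeps all 8 with -0.1875. Less negative weight means less sharpening, which matches the blurrier output.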

Quality Isn’t Cheap

Note that in fixing the image quality, the average serial processing time for FastScaling went up from 301ms to 384ms, an increase of about 28%. Initially, it was cheating by sampling fewer pixels than it should, and we wouldn’t have caught it without comparing visually. It’s a bit troubling that I had to set the window size to an undocumented value to get the correct visual result, but hopefully that’s just a bug and not an intentional cheat.

Before we look at the rest of the numbers, we need to make one more small change. Remember, we were using PNG output, and we don’t want to do that for anything other than visual comparisons. The PNG encoder is much slower than the JPEG one when processing a large or complex image, so it hides some of the scaler performance differences. Plus it’s not representative of what we’d do in the real world. Now that we know the scalers are producing the same quality results, I’ll re-run the tests with JPEG output (quality 90).

There we go. Notice the numbers didn’t change a ton, but they all went down. It basically amounted to a 7-8ms difference in the serial runs. Where that really shows up, though, is in the WIC numbers. That 8ms difference represented a 22% speed-up in the WIC resizer. Seriously, that thing is fast.

I have just a few notes on the numbers at this point:

You may have noticed I’ve only been quoting the numbers from the serial portion of the tests. There are two reasons for that.

- We can only get a fair comparison with GDI+ on one thread since we know it’s non-reentrant. We’re still establishing a baseline at this point.

- My processor has Intel’s Turbo Boost and Hyper-Threading features enabled, so the performance on multiple threads will always be lower per thread than when only one is working at a time. The serial test represents the true best time for each scaler.

The parallel results are important for scalability, but in the interest of keeping the number of variables to a minimum, the serial tests are the most controlled and easily comparable.

Now that we’ve normalized the quality, FastScaling is very close to GDI+ in single-threaded performance. Its advantage is now down to less than 8%. That’s certainly nowhere near the 5-12x improvement we were teased with in the beginning. It does perform better running on multiple threads, but we’ll see later why you probably don’t want it doing that.

This test was mostly about comparing FastScaling and GDI+ on even terms, but check out those numbers on MagicScaler. Producing the same quality output, it’s significantly faster than both of the others. And we haven’t even opened up our bag of tricks yet. We’ll do that once we start enabling some of the FastScaling shortcuts, to keep it fair.

Wrapping up, let’s go back to what I originally said about benchmarks. I set out to show that FastScaling really isn’t that much faster than GDI+, and with a few tweaked variables, I think I’ve done that. It is still faster in this case, but in the next post we’ll see some cases where it’s not. And if you want to talk about big numbers, you can grab some out of this test as well. WIC came in over 53x faster than GDI+ in this last test at 8 threads. That’s end-to-end processing, compared against an optimized GDI+ implementation. Of course I traded quality for that big number, but so did the benchmarks from the FastScaling page, and my number is bigger. Neener, neener.

In the next post I’ll take a stab at explaining why GDI+ came in last in this benchmark, and I’ll reveal FastScaling’s dirty little secret that doesn’t show up in the timing numbers. Stay tuned…

Oh, and one more thing. I keep typing ‘FastScaler’ instead of ‘FastScaling’, and now I notice I labeled it that way in my benchmark app. Mea culpa. Just in case there was any confusion, I was using the right component. I’ll fix the label going forward, but I don’t feel like redoing any of the benchmarks to correct the images I’ve already done.