Image Scaling with GDI+ Part 5: Push vs Pull and… Image Validation?

Welcome to the final post in my series examining image resizing with DrawImage().

In this final part, I will be covering the concept of image validation in the System.Drawing GDI+ wrappers.

There’s an easily-overlooked call in all the Image factory methods and Bitmap constructors that load images from a file or stream. After loading the image, they all include a call to the virtually undocumented GDI+ function GdipImageForceValidation(). I say virtually undocumented because the only reference I could find for it is this MSDN page. If you read the first paragraph of that page and then glance down at the very bottom of the table below it, you’ll learn two things:

- There is a Flat API for GDI+ that isn’t supported for use directly (you’re supposed to use the C++ class wrappers).

- GdipImageForceValidation() is only available in the Flat API.

I would hazard a guess based on those points that the function in question probably exists solely for use by System.Drawing. But what does it do? The table just says this:

“This function forces validation of the image.”

Well, that’s not particularly informative. Nor is it particularly accurate. I mean, I guess it does do some form of validation, but it’s the way it does it that’s of interest to us. What that function actually does is force GDI+ to materialize the bitmap during load. That can have a profound impact on performance.

Normally, GDI+ (like WIC, WPF, MagicScaler and anything else sensible) uses a ‘pull’ model for its processing pipeline. That means that when you open an image file, the only thing that happens at that point is that the image header is loaded and validated. It’s not until the pixel data is required (during DrawImage() in our case) that decoding, format conversion, color correction, etc. are performed. And those steps are performed only for the pixels we actually consume.

Essentially, System.Drawing breaks that model by default and turns it into a ‘push’ model, where the image is first completely decoded into a bitmap in memory and then pushed through to the next step. That’s incredibly wasteful if, for example, you’re cropping a section out of a large image. Why decode the whole thing and use up all that memory if you’re not even using all the pixels?

In addition, using the default pull mode pipeline allows GDI+ to avoid ever holding the entire decoded bitmap in memory even if you are using all the pixels. It will instead read individual scanlines as they are needed. System.Drawing breaks that by default as well. In order to illustrate this, I wrote a sample program that uses four different methods of opening the same image file and then uses those images as inputs to DrawImage(). In each case, I resized the same 18MP source image to 100x100. The four methods I used are as follows:

- Image.FromStream(filestream, useEmbeddedColorManagement: true, validateImageData: true)

- Image.FromStream(filestream, useEmbeddedColorManagement: true, validateImageData: false)

- Image.FromFile(filename, useEmbeddedColorManagement: true)

- new Bitmap(filestream, useIcm: true)
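For readers who want to try something similar, here is a minimal sketch of how the four loaders above could be compared. It is not the original test program: the `LoadComparison` class, the `TimeResize` and `RunAll` helpers, and the use of `Stopwatch` for timing are all assumptions made for illustration. Only the four load calls, the 100x100 target and the forced garbage collection come from the post.

```csharp
using System;
using System.Diagnostics;
using System.Drawing;
using System.IO;

// Hypothetical harness; the names here are illustrative, not from the original test app.
public static class LoadComparison
{
    // Loads an image with the supplied loader, draws it into a 100x100 bitmap
    // using the default Graphics settings, and returns the elapsed milliseconds.
    public static long TimeResize(Func<Image> load)
    {
        var sw = Stopwatch.StartNew();
        using (var src = load())
        using (var dst = new Bitmap(100, 100))
        using (var g = Graphics.FromImage(dst))
        {
            g.DrawImage(src, 0, 0, 100, 100);
        }
        sw.Stop();

        // Force a collection between runs so each run's memory can be read in isolation.
        GC.Collect();
        return sw.ElapsedMilliseconds;
    }

    // Runs the four load methods in the order listed above and returns their timings.
    public static long[] RunAll(string path)
    {
        var times = new long[4];

        // Test 1: stream load with validation (the System.Drawing default behavior).
        using (var fs = File.OpenRead(path))
            times[0] = TimeResize(() => Image.FromStream(fs, useEmbeddedColorManagement: true, validateImageData: true));

        // Test 2: stream load with validation skipped.
        using (var fs = File.OpenRead(path))
            times[1] = TimeResize(() => Image.FromStream(fs, useEmbeddedColorManagement: true, validateImageData: false));

        // Test 3: file load; there is no overload that skips validation.
        times[2] = TimeResize(() => Image.FromFile(path, useEmbeddedColorManagement: true));

        // Test 4: Bitmap constructor over a stream.
        using (var fs = File.OpenRead(path))
            times[3] = TimeResize(() => new Bitmap(fs, useIcm: true));

        return times;
    }
}
```

Each stream is kept open until the image loaded from it has been disposed. Timings from a harness like this will vary with the source image and the machine, so treat them as relative rather than absolute.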

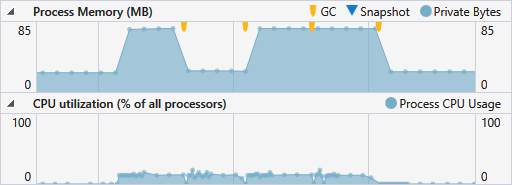

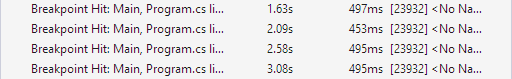

And here is the Visual Studio 2015 Diagnostic Tools graph of the CPU and RAM usage from a test run. I forced a garbage collection after each test to isolate the memory usage from each one.

The area of higher CPU usage (bottom graph) covers the four test runs and the orange GC arrows mark the end of each one. The times before and after show the test app’s baseline memory usage, which was steady at around 23MiB. You can see that during tests 1, 3, and 4, the memory usage spiked up (to a peak of 75MiB in each case) whereas test 2 stayed down near the baseline (24MiB actually). Test 2, of course, is the one that disabled ‘validation’ of the image. In addition to the memory savings, the test without validation also ran more quickly.

Notice that test 2 took 42ms less than the best of the others. This was an expensive test in that the source image was high resolution and had an embedded color profile. Due to the sampling rate of the graph, it was easier to show the correlation between each test run and its memory usage with a slower operation. The corresponding improvement in performance when skipping validation is potentially more significant on a more typical operation. Here it amounted to 9%, but it’s not uncommon to see an improvement closer to 15% with high-res images.

The source image for these tests was a 5184x3456 JPEG. Just doing a quick bit of math, 5184 * 3456 * 3 (bytes per pixel) / 1024 / 1024 = 51.26MiB, which matches the difference in memory usage between the tests with validation (75MiB) and the test without (24MiB). 42ms of CPU time is nothing to sneeze at, but it’s not a huge deal. The extra 51MiB of memory can be pretty significant, though, especially when dealing with a server app. And of course more MPs means more MiBs.

But wait, there’s more!

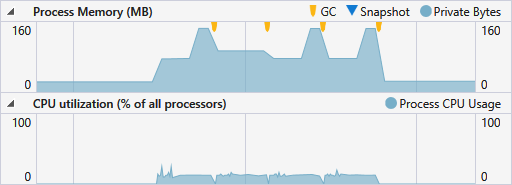

In my first trial, I kept things simple by using the default Graphics settings for my DrawImage() call. Stepping things up and using my recommended settings from Part 3 of this series, the graph looks like this:

Here we see that the memory usage is a lot higher across the board and has some even higher spikes. The difference goes back to my point about the HighQuality interpolators in GDI+ working only in RGBA mode. More specifically, they work in Premultiplied (or Associated) Alpha mode, aka PARGB. Because my input image was a JPEG, it had to be converted from RGB to PARGB for processing.

The baseline memory is the same in this trial: 23MiB. We can see the memory move up to 75MiB as soon as the image is loaded in test 1, just as before. It plateaus there while the image is ‘validated’, then it spikes again once DrawImage() starts, which moves it up to 144MiB(!). The reason for the second spike is the PARGB conversion: 5184 * 3456 * 4 / 1024 / 1024 = 68.34MiB. That, plus the buffering required by the scaler, accounts for the difference in memory usage.

During the 2nd test, the memory usage drops to a constant 93MiB, which is just the baseline plus the ~70MiB for the converted PARGB version and buffering. Again, we save the 51MiB for the decoded image there, so while the memory usage is high, it could be worse. Like in tests 3 and 4, which match test 1 exactly.
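The back-of-the-envelope figures quoted in this post are easy to reproduce. The sketch below is just that arithmetic wrapped in a hypothetical `DecodedSize` helper (the class and method names are mine). It ignores any per-row stride padding, which happens not to matter for this image's width.

```csharp
using System;
using System.Globalization;

// Hypothetical helper for estimating the size of a fully decoded bitmap.
public static class DecodedSize
{
    // Raw pixel storage in bytes, ignoring stride padding.
    public static long Bytes(int width, int height, int bytesPerPixel)
        => (long)width * height * bytesPerPixel;

    // Converts a byte count to mebibytes.
    public static double MiB(long bytes) => bytes / 1024.0 / 1024.0;

    // Prints the two estimates used in this post for the 5184x3456 test image.
    public static void Print()
    {
        long rgb = Bytes(5184, 3456, 3);   // 24bpp RGB, as decoded from the JPEG
        long pargb = Bytes(5184, 3456, 4); // 32bpp PARGB, as used by the HighQuality interpolators

        Console.WriteLine(string.Format(CultureInfo.InvariantCulture, "24bpp RGB:   {0:F2} MiB", MiB(rgb)));
        Console.WriteLine(string.Format(CultureInfo.InvariantCulture, "32bpp PARGB: {0:F2} MiB", MiB(pargb)));
    }
}
```

Plugging in the dimensions of your own typical source images gives a rough lower bound on the per-request memory cost when the whole bitmap is materialized.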

As an aside, in case anyone is wondering how I came to the conclusion that DrawImage() converts the source image to PARGB or whether that can be avoided, I’ll explain. The memory spike during the DrawImage() call very clearly correlates to a 32bpp copy of the entire source image being held in memory, as indicated by the math above. That seems to imply that it works in RGBA mode. I wondered whether this conversion always occurred, so I re-saved my test JPEG as an RGBA TIFF and ran the test again. The spikes were still there. But TIFF supports storing an alpha channel in either associated or unassociated forms, and I had tried it with an unassociated alpha channel first. So I re-saved again with associated alpha, and the memory graph profile changed to only include a single spike for each test (to 93MiB). Mystery solved. Note, however, that although the conversion didn’t happen with the PARGB image, the HighQuality interpolation modes do apparently require that the entire image be decoded into memory for processing, which is why all the tests showed the same memory usage. Whether the image was loaded during the ‘validation’ step or at the start of DrawImage(), it was completely held in memory for the duration of each test.

Getting back to the above graph, the more astute among you may have noticed that in test 2, the PARGB copy was held in memory for the entire length of the test, which would lead you to believe that DrawImage() took longer. And you’d be right. When the validation step is performed, the entire decode and color management process occurs before DrawImage() starts, so less time is spent in that method. And while we haven’t discussed it in this series, some of you might be aware that DrawImage() holds a process-wide lock while it does its work. I’ll be talking more about that when I compare GDI+ with MagicScaler.

With that locking business in mind, you might be thinking it’s worth doing anything you can to reduce the amount of time spent inside DrawImage(). There, you’d be wrong (IMHO). Consider a scenario where you have a large number of image resizing requests coming in to a web app simultaneously. If you allow all of them to completely load their corresponding bitmaps before queuing up for their turn at DrawImage(), they could be waiting in that queue for a long time with all that memory held. You’ll potentially have a memory spike that can cause the server to start paging memory or may cause your app pool to cross its memory limit and get recycled. Those things are bad. I would suggest letting them queue a bit longer without tying up any memory. The total processing time will be reduced, and your app will be more stable. In fact, considering that, and seeing what we saw above with regard to the amount of memory consumed by a single resize operation, it seems that GDI+ has a good reason for not allowing you to run multiple DrawImage() operations in parallel. Depending on your workload, the memory requirements could be ridiculous.

So I think I’ve made my point by now that System.Drawing’s ‘validation’ of the image is all-around bad for performance. What can you do about it? Well, be like Test 2. That’s the one (and only one) way to skip the validation step from System.Drawing. Because the validateImageData parameter to Image.FromStream() is not available on any of the Image.FromFile() overloads, I use a FileStream and pass that to Image.FromStream() whenever loading from a file.
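As a rough illustration of that pattern, here is a minimal sketch of loading from a file by way of a FileStream with validation turned off. The `UnvalidatedLoad` class and its `Resize` method are hypothetical names, and the drawing code uses default Graphics settings purely to keep the example short. The one detail that is not optional is that the stream has to stay open for as long as the Image is in use, so both are scoped together here.

```csharp
using System.Drawing;
using System.IO;

// Hypothetical helper showing the FileStream + Image.FromStream() pattern.
public static class UnvalidatedLoad
{
    // Opens the file, loads it without the validation step, and returns a resized copy.
    // The caller owns (and must dispose) the returned Bitmap.
    public static Bitmap Resize(string path, int width, int height)
    {
        using (var fs = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.Read))
        using (var src = Image.FromStream(fs, useEmbeddedColorManagement: true, validateImageData: false))
        {
            var dst = new Bitmap(width, height);
            try
            {
                using (var g = Graphics.FromImage(dst))
                {
                    // Pixel data is pulled from the source during this call.
                    g.DrawImage(src, 0, 0, width, height);
                }
                return dst;
            }
            catch
            {
                // Don't leak the destination bitmap if drawing fails.
                dst.Dispose();
                throw;
            }
        }
    }
}
```

A call such as `UnvalidatedLoad.Resize(@"C:\images\photo.jpg", 100, 100)` (the path is a placeholder) would return a 100x100 bitmap. Because the source image and its stream are disposed before the method returns, nothing larger than the output is held afterward.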

“But skipping validation sounds dangerous”, I hear some of you saying…

Actually, I can’t find any evidence to suggest it’s dangerous at all, nor have I ever had a problem with it in the many years I’ve been doing it. I suppose it’s possible that in olden times, the GDI+ image decoders might have been able to cause access violations, buffer overflows, etc. when reading corrupted image data. It’s also entirely possible the designers of System.Drawing just went into total overkill mode when working on the safety of the framework.

What I can say for sure is that starting with Windows 7, GDI+ uses WIC internally for decoding/encoding. And I can say that WIC’s built-in decoders are extremely resilient when it comes to corrupted or truncated files. Keep in mind, WIC is used by Windows Explorer and Internet Explorer, so it has to be pretty well bulletproof. So as far as I know, there is absolutely no risk to skipping the image data validation step from System.Drawing. You can read more about Microsoft’s security recommendations for WIC codec developers here. It’s a safe bet their codecs follow their own advice, and GDI+ doesn’t allow you to access any third-party codecs which may be less safe.

In my own testing of a suite of broken image files, the WIC decoders always either silently decoded what they could of the images (filling in the rest with blank pixels) or threw an exception as soon as the header was loaded. What’s more, in the cases where WIC silently processed the corrupted images, GDI+ failed to report any problems in its validation step despite the images being corrupted or incomplete. That’s further evidence it’s not doing anything for you that the underlying WIC decoder isn’t already.

And really, if the validation is actually worth doing, why is GdipImageForceValidation() left out of the supported C++ wrappers?

Anyone?

Bueller?

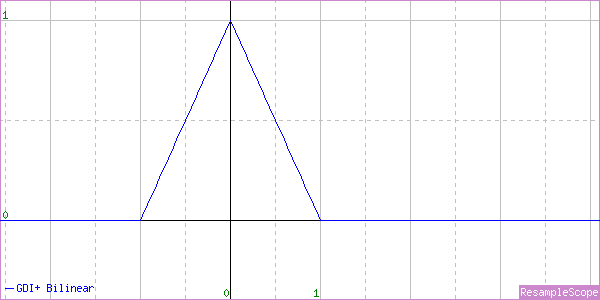

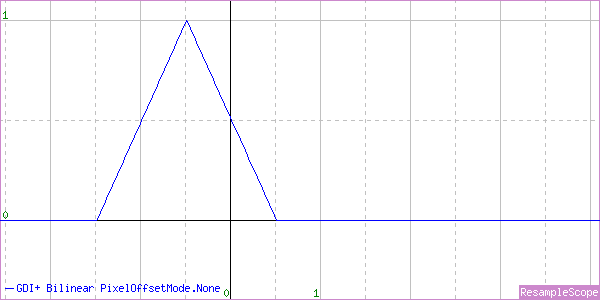

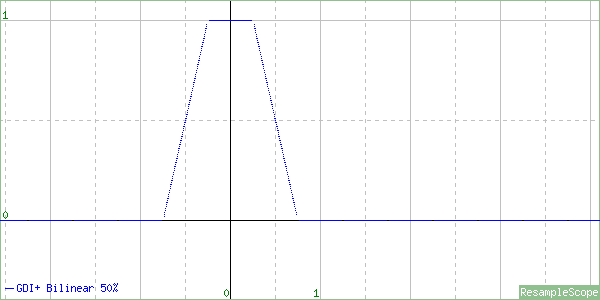

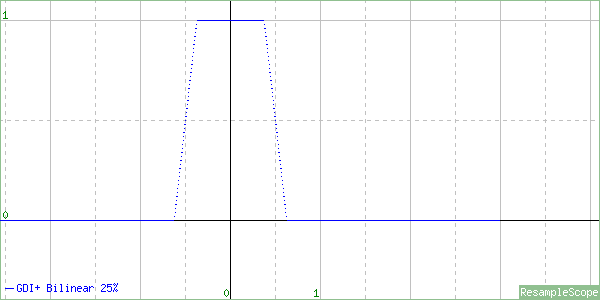

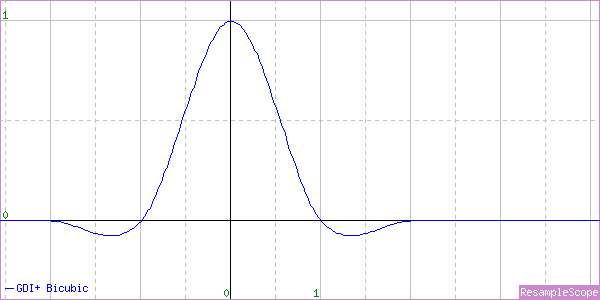

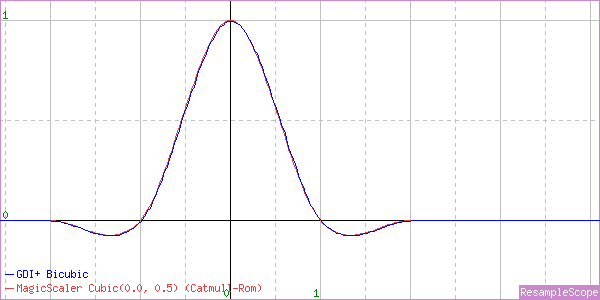

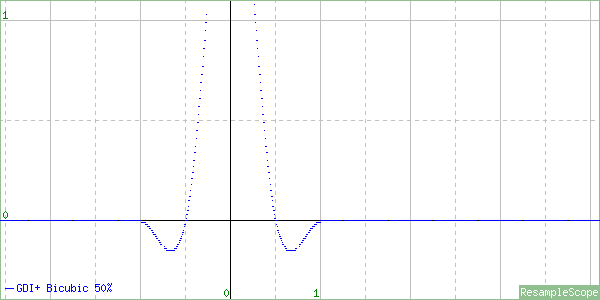

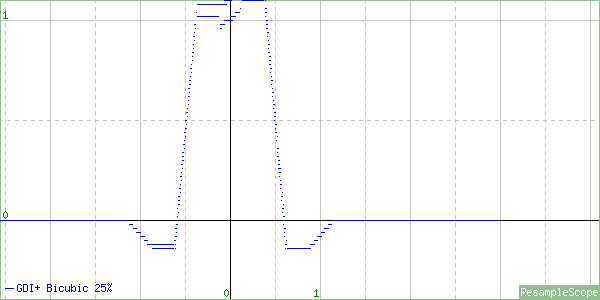

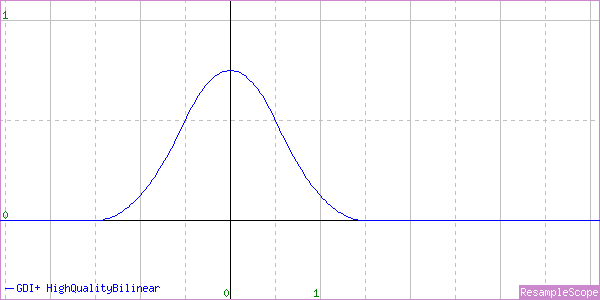

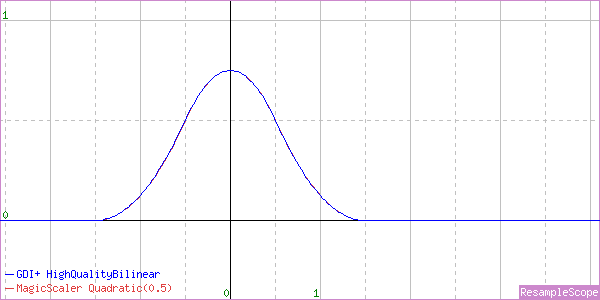

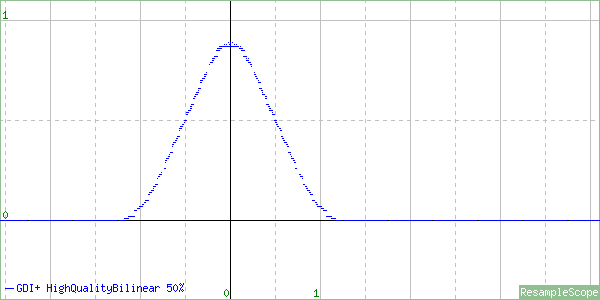

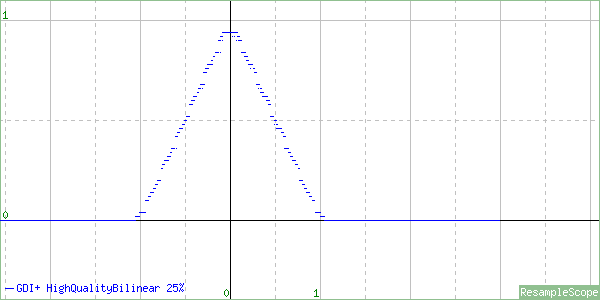

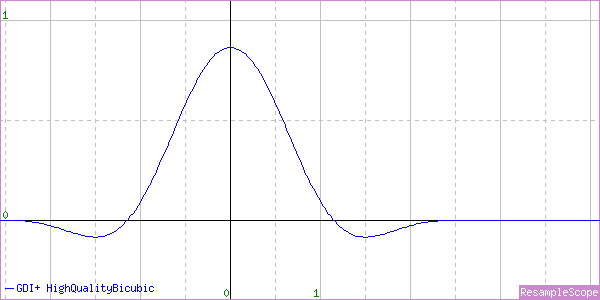

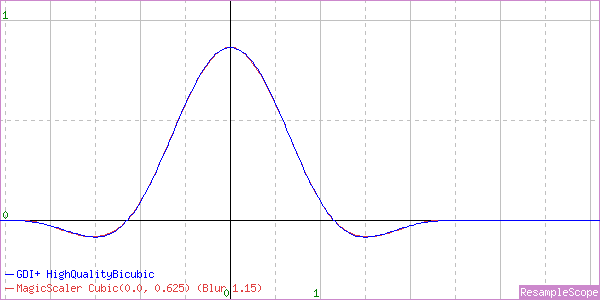

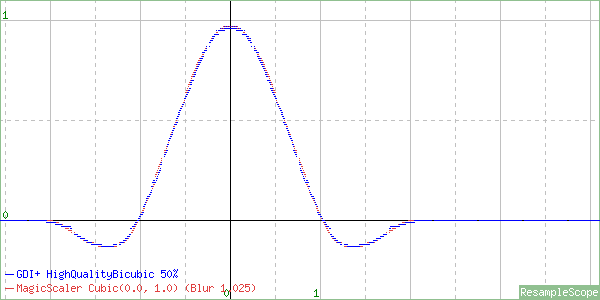

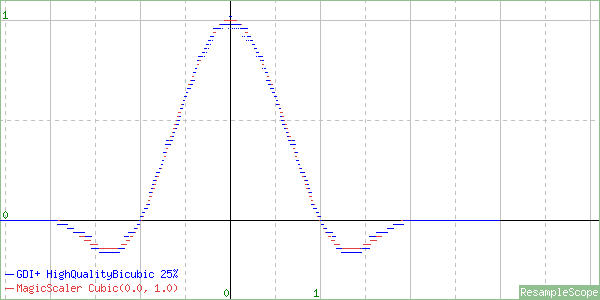

Tune in next time when I’ll turn my copy of ResampleScope loose on the WIC interpolators to see how they do. It’s gonna be fun on a bun.