Fast Hashing with BLAKE2 Part 1: NuGet is a Minefield

Free as in…

Before I get into the titular topic of this post, I’d like to discuss my motivation for writing it. Free software has become increasingly important in the business world over the last couple of decades, and the industry has adopted phrases like “free as in beer” and “free as in speech” to define what ‘free’ even means.

For those not familiar, “free as in beer” speaks to the acquisition cost of the software. In the old days, closed-source freeware and shareware were common. They were free (of cost) to download and use, but using them was all you could do. You were not free (as in freedom) to see or modify the code. In light of that, it became important to differentiate software that was truly free, in that you can use it in any way you please, modify it, or enhance it. That software is “free as in speech”.

In the Microsoft world, the .NET Framework has always been “free as in beer” – assuming you discounted the Windows license you had to pay for in order to have a place to run it. With .NET Core, .NET finally became “free as in speech”, and it has improved at an unprecedented pace as people use that freedom to give back to the project. That change has accelerated (or at the very least coincided with) the uptake of Free Open Source Software (FOSS) in the corporate world as well, especially among the ‘Microsoft shops’ that typically eschewed open source. And that has led to more conversations about the true cost and true value of FOSS.

When talking about overall cost, another phrase now in common use is “free as in puppy”. That phrase is somewhat less well-defined than the others. To some, it means that adopting free software comes with some responsibility. It has to be cared for on an ongoing basis, or else it may grow unhealthy and eventually die. That’s true to some extent, but I do agree with Jeff’s take on it as well. If a piece of software requires as much maintenance as a puppy, you shouldn’t be using it, free or not.

Another way of looking at it is that the acquisition cost of the software is inconsequential compared to the cost of evaluation, training, integration, testing, and maintenance of said software within a larger system. It doesn’t matter whether you pick up a stray puppy off the street or buy a fancy $1k designer puppy from a breeder; the cost of caring for that puppy over its lifetime will dwarf the acquisition cost. Likewise, in a business environment, whether you pay $10k for a piece of software or get it for free, if you use it long enough, the acquisition cost will become largely irrelevant.

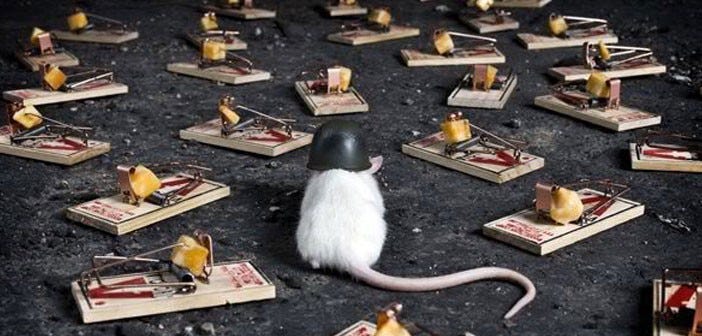

Which brings me to another phrase I saw recently: “free as in mattress”. I think many of us with a corporate development background have learned to view free software in this way. For small bits of functionality (like a simple hashing algorithm), a corporate team often has the choice to build or buy – whether at zero acquisition cost or some higher number. If the team is good, the cost to build can be estimated fairly accurately, as can the maintenance cost. So, like a new mattress, it has a known upfront cost and known risks. When you buy (or take for free) a piece of software, you often don’t know what you’re getting into – not unlike a used mattress. Maybe that free mattress looks nice on the outside. But when you’re dealing with a bedbug infestation a few months later, ‘free’ is a much worse deal. Many would prefer to avoid the risk altogether and buy the new mattress every time.

I’ve seen enough bad code offered up in blog posts, CodeProject articles, StackOverflow answers, and SourceForge/GitHub projects to be very wary of all but the largest corporate-sponsored projects. I don’t mean to pick on the people who write that code. It takes courage to publish the code you write for the world to see (and criticize). And it takes generosity to offer up something you worked hard on for others to use, with no benefit (and likely added headaches) to you. But it also takes a lot of trust to bring that mattress into your house – or code into your project. And, of course, as an author of open source projects myself, I do appreciate the irony in having that attitude.

Caveat Implementor

Despite the benefits that come with the larger swing in the direction of embracing FOSS, maybe sometimes it’s good to remember the lessons we’ve learned over the years when it comes to software quality and maintenance cost. I was reminded of that recently when evaluating implementations of the BLAKE2 hashing algorithm.

I had looked at BLAKE2 a few years ago when choosing a hashing algorithm to use for the cache file naming in WebRSize. I use a base-32 encoded 40-bit hash of the settings used to generate an image when naming its cache file. One neat thing about BLAKE2 is that the spec allows for hashes of any length between 1 and 64 bytes, and the hash length is fed into the parameter block that is mixed with the initialization vector, so a 40-bit hash is not just the first 40 bits of the full-length hash; it’s a different value altogether.
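A minimal sketch of that last point, using Python's standard `hashlib` module (chosen only because it ships BLAKE2; it is not one of the .NET libraries discussed in this post). The variable names are illustrative, and the base-32 step assumes the standard RFC 4648 alphabet, which may not be what WebRSize uses:

```python
import base64
import hashlib

data = b"abc"

# Ask BLAKE2b for a 5-byte (40-bit) digest directly.
short_digest = hashlib.blake2b(data, digest_size=5).digest()

# Compute the full 64-byte digest and keep only its first 5 bytes.
truncated_full = hashlib.blake2b(data, digest_size=64).digest()[:5]

# The requested length is part of the parameter block, so the two differ.
print(short_digest.hex().upper())
print(truncated_full.hex().upper())
print(short_digest != truncated_full)

# 40 bits map to exactly 8 base-32 characters, so no padding is needed.
encoded = base64.b32encode(short_digest).decode("ascii")
print(encoded)
```

Because 40 is a multiple of 5, a 40-bit digest fills a whole number of base-32 characters, which keeps the encoded name short and free of padding.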

Although I wanted to use the BLAKE2 algorithm, Microsoft doesn’t supply one in .NET, and the only NuGet packages available were a random assortment from developers I don’t know or trust. It was a perfect example of a “free as in mattress” problem, if that’s how you tend to view these things. I didn’t want to take the time to evaluate the available libraries properly nor to write my own, so I decided to simply take the first 40 bits of a SHA256 hash instead, using the hash algorithm built into .NET (which uses CNG on Windows).
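The SHA256 fallback described above might look something like this sketch. `cache_key` is a hypothetical helper, not the WebRSize code, and both the RFC 4648 base-32 alphabet and the lowercase output are assumptions made for illustration:

```python
import base64
import hashlib


def cache_key(settings: bytes) -> str:
    """Hypothetical helper: first 40 bits of SHA-256, base-32 encoded."""
    digest = hashlib.sha256(settings).digest()
    # Keep the first 5 bytes (40 bits), which encode to 8 base-32 characters.
    return base64.b32encode(digest[:5]).decode("ascii").lower()


print(cache_key(b"width=200;height=100;format=jpeg"))
```

Unlike the BLAKE2 case, this really is a plain truncation: the short value is a prefix of the full SHA-256 digest.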

When .NET Core 2.1 RC1 was released a couple of weeks ago, I was trying to come up with a small project I could use to try out the new X86 Intrinsics support. The reference BLAKE2 implementation includes SSE-optimized versions of the algorithms, so I thought porting those would let me test out the new functionality while getting the trustworthy BLAKE2 implementation I had wanted. And since I had to set up a reference implementation and test harness for checking correctness and for benchmarking, I decided to go all out and check all the NuGet packages I could find using the same tests/standards. What I found was that the “free as in mattress” view of things is as legit as ever.

BLAKE2 in a Nutshell

BLAKE2 is derived from the BLAKE algorithm, which was one of the leading candidates from the SHA-3 competition. Ultimately, it wasn’t chosen (that honor went to Keccak), but it has some interesting properties that make it useful for general-purpose secure hashing. The short version is, its designers claim it’s more secure than SHA-2, and the modifications in BLAKE2 make it faster than MD5 when calculated in software. Basically, anywhere people use MD5 today, BLAKE2 is a definite upgrade.

BLAKE2 comes in two flavors: BLAKE2b and BLAKE2s. BLAKE2b produces a hash of up to 512 bits using an internal state made up of 8 64-bit values and is optimized for 64-bit platforms. BLAKE2s uses 8 32-bit values to produce a hash of up to 256 bits so it can be faster on 32-bit platforms. In Part 2 of this post, we’ll see that use of the SSE instruction sets can make BLAKE2b perform nearly equally in 32-bit and 64-bit, but let’s not jump ahead…
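For a quick look at how the two flavors differ, Python's `hashlib` exposes the relevant limits as constants. This is only an illustration of the sizes in the spec, not a statement about any .NET package:

```python
import hashlib

limits = {}
for name, ctor in (("BLAKE2b", hashlib.blake2b), ("BLAKE2s", hashlib.blake2s)):
    hasher = ctor()
    limits[name] = {
        "max_digest_bytes": ctor.MAX_DIGEST_SIZE,  # longest hash the variant can produce
        "max_key_bytes": ctor.MAX_KEY_SIZE,        # longest key for keyed hashing
        "block_bytes": hasher.block_size,          # input consumed per compression round
    }
    print(name, limits[name])
```

BLAKE2b works on 128-byte blocks and tops out at a 64-byte digest, while BLAKE2s halves both numbers.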

The Reference

The designers of BLAKE2 published several reference implementations in a GitHub repo, so that’s a natural place to start.

Among those is a C# implementation (Blake2Sharp), which should be the perfect reference to use for my testing. The only thing that gave me pause was that the code is incomplete. Not all the BLAKE2 functionality is finished. Tree hashing mode, for example, is partially there but commented out. And there are three different versions of the core algorithm implementation, with two of those commented out – both slower than the ‘unrolled’ version that is enabled. It’s also missing the BLAKE2s variant. Bottom line: it looks like a work in progress and hasn’t been updated in years. I decided to include it in my testing but figured I should bring along some backup just to be safe…

The C reference implementation is complete, including both the BLAKE2b and BLAKE2s variants. And there are the aforementioned SSE-optimized versions. I decided to compile the scalar version into a DLL and call it via PInvoke as a baseline performance reference.

Finally, there are simpler and tidier implementations of both variants available in the RFC that describes BLAKE2. Although they’re written in C, it was very easy to port those over to C# to serve as another set of backup references. Those implementations are designed to be simple and correct, with no optimization. The RFC versions omit the optional features like tree mode hashing, but the implementations are less than 200 lines of code each and very easy to follow. My C# conversion is as true to the C reference as possible, including the lack of optimization.

The Tests

With those references chosen (3 for BLAKE2b and 2 for BLAKE2s), I set about methodically testing every NuGet package I could find. My requirements were simple: the implementation had to support the basic features defined in the RFC. That is, keyed and non-keyed hashing with variable digest length from 8 bits up to 256 or 512, as appropriate. I tested the qualifying implementations for speed and correctness.
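The correctness requirement can be pictured as a simple cross-check against a trusted reference. The sketch below is not the published test code; it uses Python's `hashlib` as the reference, and `find_mismatches` and the `(data, digest_size, key)` calling convention are hypothetical names invented for the example:

```python
import hashlib
from typing import Callable, List, Tuple

# Hypothetical calling convention: (data, digest_size, key) -> digest bytes.
HashFn = Callable[[bytes, int, bytes], bytes]


def reference_blake2b(data: bytes, digest_size: int, key: bytes) -> bytes:
    return hashlib.blake2b(data, digest_size=digest_size, key=key).digest()


def find_mismatches(candidate: HashFn, reference: HashFn,
                    max_digest: int, max_key: int) -> List[Tuple[int, int, int]]:
    """Compare candidate and reference for every digest length, keyed and unkeyed."""
    inputs = [b"", b"abc", bytes(range(256))]
    keys = [b"", b"k", bytes(max_key)]  # no key, short key, maximum-length key
    mismatches = []
    for data in inputs:
        for key in keys:
            for size in range(1, max_digest + 1):
                if candidate(data, size, key) != reference(data, size, key):
                    mismatches.append((len(data), len(key), size))
    return mismatches


# Sanity check: the reference trivially agrees with itself.
print(find_mismatches(reference_blake2b, reference_blake2b, 64, 64))
```

A candidate that only truncates a full-length digest, for example, would show up here as a mismatch at nearly every digest length below the maximum.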

Benchmarking was performed with BenchmarkDotNet under .NET Core 2.1-rc1 on Windows 10, using the following inputs.

- The ASCII string ‘abc’

- The contents of the sRGB ICC profile that ships with Windows 10 (3.19KiB)

- 10MiB of random data.

I also tested each implementation with other data sizes and with keyed hashes, but for the sake of brevity, I’ll just include the results for the three listed above. The output was a 40-bit hash, and I included a custom column in the BenchmarkDotNet output to allow for a quick check of output correctness. Note that BLAKE2b and BLAKE2s are different algorithms and produce different outputs by design. Test code is published here, and this is my test environment:

BenchmarkDotNet=v0.10.14, OS=Windows 10.0.17134
Intel Xeon CPU E3-1505M v6 3.00GHz, 1 CPU, 8 logical and 4 physical cores
Frequency=2929692 Hz, Resolution=341.3328 ns, Timer=TSC
.NET Core SDK=2.1.300-rc1-008673
[Host] : .NET Core 2.1.0-rc1 (CoreCLR 4.6.26426.02, CoreFX 4.6.26426.04), 64bit RyuJIT
DefaultJob : .NET Core 2.1.0-rc1 (CoreCLR 4.6.26426.02, CoreFX 4.6.26426.04), 64bit RyuJIT

Here’s what I found:

The Good

Of the 8 NuGet packages I found that list BLAKE2 support, only one had an implementation that was complete and correct according to the RFC as well as being fast enough for general-purpose hashing. The winner is blake2s-net.

This implementation appears to be a straight conversion of the Blake2Sharp reference code to support the BLAKE2s algorithm, with original credit going to Dustin Sparks.

Here are its benchmark results compared with the 2 references:

3-byte input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
----------------- |----------- |---------:|---------:|---------:|-------:|----------:|
Blake2sRefNative | FE4D57BA07 | 259.8 ns | 1.444 ns | 1.351 ns | 0.0072 | 32 B |
Blake2sRFC | FE4D57BA07 | 794.8 ns | 4.051 ns | 3.789 ns | 0.0067 | 32 B |
blake2s-net | FE4D57BA07 | 366.0 ns | 2.053 ns | 1.921 ns | 0.1273 | 536 B |

3.19KiB input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
----------------- |----------- |----------:|----------:|----------:|-------:|----------:|
Blake2sRefNative | 62320CA3FC | 9.818 us | 0.0503 us | 0.0446 us | - | 32 B |
Blake2sRFC | 62320CA3FC | 39.240 us | 0.3034 us | 0.2689 us | - | 32 B |
blake2s-net | 62320CA3FC | 7.274 us | 0.0326 us | 0.0305 us | 0.1221 | 536 B |

10MiB input

Method | Hash | Mean | Error | StdDev | Allocated |
----------------- |----------- |----------:|----------:|----------:|----------:|
Blake2sRefNative | 6500962DE3 | 30.87 ms | 0.1184 ms | 0.0989 ms | 0 B |
Blake2sRFC | 6500962DE3 | 122.67 ms | 0.5827 ms | 0.5451 ms | 0 B |
blake2s-net | 6500962DE3 | 22.27 ms | 0.1013 ms | 0.0898 ms | 536 B |

This is exactly what you’d expect from a version that’s correctly implemented and optimized. The only knock on this package is that it is compiled with a .NET Framework target, so it can’t be used with older .NET Core or .NET Standard projects. It does work, however, with the .NET Framework library support in .NET Core 2.0 and up. And this one only implements the BLAKE2s variant, so for BLAKE2b, you’ll need to look elsewhere.

[Note that in Part 2 of this post, I’ll cover my own optimized BLAKE2s implementation which does better than this one.]

You can also see here that the RFC implementation is, as expected, very slow. It’s correct, but I wouldn’t use it in any real project. Remember that speed is one of the main reasons for choosing BLAKE2 over other hashing algorithms, so a slow implementation makes it rather pointless.

The Bad

I can’t say I was surprised to find that one of the 8 packages contained an incorrect implementation of the BLAKE2 algorithm, but I was surprised to find that it was the one with the highest download count. If you search ‘BLAKE2’ on nuget.org today, the top match will likely be Konscious.Security.Cryptography.Blake2.

This appears to be a from-scratch implementation of BLAKE2b based on the RFC but with a mistake that will show up shortly. Let’s jump straight into the benchmark results.

3-byte input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
----------------- |----------- |-----------:|---------:|---------:|-------:|----------:|
Blake2bRefNative | 44229FC0EF | 330.2 ns | 2.326 ns | 2.176 ns | 0.0072 | 32 B |
Blake2bRFC | 44229FC0EF | 1,134.0 ns | 8.745 ns | 8.180 ns | 0.0057 | 32 B |
Blake2Sharp | 44229FC0EF | 519.0 ns | 3.886 ns | 3.635 ns | 0.2050 | 864 B |
Konscious | 44229FC0EF | 1,524.1 ns | 9.384 ns | 8.318 ns | 0.2213 | 936 B |

3.19KiB input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
----------------- |----------- |----------:|----------:|----------:|-------:|----------:|
Blake2bRefNative | 61EB59036B | 6.143 us | 0.0276 us | 0.0244 us | - | 32 B |
Blake2bRFC | 61EB59036B | 26.434 us | 0.1139 us | 0.1010 us | - | 32 B |
Blake2Sharp | 61EB59036B | 5.549 us | 0.0295 us | 0.0276 us | 0.1984 | 864 B |
Konscious | 61EB59036B | 20.954 us | 0.1704 us | 0.1510 us | 0.2136 | 936 B |

10MiB input

Method | Hash | Mean | Error | StdDev | Allocated |
----------------- |----------- |---------:|----------:|----------:|----------:|
Blake2bRefNative | 7B6AB409B7 | 18.94 ms | 0.1008 ms | 0.0894 ms | 0 B |
Blake2bRFC | 7B6AB409B7 | 83.18 ms | 0.6921 ms | 0.6135 ms | 0 B |
Blake2Sharp | 7B6AB409B7 | 16.61 ms | 0.1297 ms | 0.1214 ms | 864 B |
Konscious | 1636541AC6 | 63.99 ms | 0.4153 ms | 0.3885 ms | 936 B |

First, I’ll point out that the Blake2Sharp reference implementation does slightly better than the native reference version on all but the tiniest input, just as the blake2s-net conversion from that same base did better than its native reference. And the RFC version, once again, is the slowest.

Check out the Konscious version, though. Not only is it roughly 3-4x slower than the Blake2Sharp implementation, it produced a bad hash on the 10MiB input. It turns out that implementation has a bug that affects any input whose length is an exact multiple of the 128-byte block size. At exactly 10MiB, that last test input triggered the bug.
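A boundary test along the following lines would catch this class of bug. It is a sketch under stated assumptions: Python's `hashlib` serves as the trusted reference, `failing_lengths` and `boundary_lengths` are hypothetical helpers, and `buggy_candidate` is a deliberately broken stand-in that misbehaves only at block boundaries. It does not reproduce the actual defect in the Konscious package, which isn't described here.

```python
import hashlib

BLOCK = 128  # BLAKE2b block size in bytes


def pattern(n: int) -> bytes:
    """Deterministic, non-constant test data of length n."""
    return bytes(i % 251 for i in range(n))


def boundary_lengths(block: int = BLOCK, blocks: int = 3) -> list:
    """Lengths just below, at, and just above each of the first few block boundaries."""
    lengths = {0}
    for n in range(1, blocks + 1):
        lengths.update((n * block - 1, n * block, n * block + 1))
    return sorted(lengths)


def reference(data: bytes) -> bytes:
    return hashlib.blake2b(data, digest_size=5).digest()


def failing_lengths(candidate, lengths) -> list:
    """Return every tested length where the candidate disagrees with the reference."""
    return [n for n in lengths if candidate(pattern(n)) != reference(pattern(n))]


def buggy_candidate(data: bytes) -> bytes:
    # Deliberately broken stand-in: mishandles input ending exactly on a block boundary.
    if data and len(data) % BLOCK == 0:
        data = data + b"\x00"
    return reference(data)


print(failing_lengths(buggy_candidate, boundary_lengths()))
print((10 * 1024 * 1024) % BLOCK)  # 10MiB lands exactly on a block boundary
```

Inputs like ‘abc’ and a 3.19KiB file never land on a block boundary, which is why only the 10MiB case exposed the problem in the benchmarks above.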

I have reported the bug to the owner of that package/project, and it may be fixed by the time you read this. But that may not be a good thing for anyone already using this library. If you generate hashes and then save them somewhere with the intention of validating things against them later, you can’t just ‘fix’ a problem in the hash implementation, because you will invalidate any hashes created with the broken version. And because the hash, by definition, reveals nothing about its input data, there’s no way to identify which hashes are correct and which are incorrect after the fact. You may be better off keeping it broken, bad as that may be.

The Ugly

Sorry, I had to do it.

Although it doesn’t have any logic bugs, there isn’t much else nice I can say about System.Data.HashFunction.Blake2.

This looks like another from-scratch implementation. And although it produces good hash values, check out the benchmarks:

3-byte input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
----------------- |----------- |-----------:|----------:|----------:|-------:|----------:|
Blake2bRFC | 44229FC0EF | 1,154.3 ns | 12.779 ns | 11.954 ns | 0.0057 | 32 B |
Blake2Sharp | 44229FC0EF | 523.7 ns | 4.712 ns | 4.408 ns | 0.2050 | 864 B |
S.D.HashFunction | 44229FC0EF | 2,364.9 ns | 27.715 ns | 25.925 ns | 0.4120 | 1744 B |

3.19KiB input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
----------------- |----------- |----------:|----------:|----------:|-------:|----------:|
Blake2bRFC | 61EB59036B | 26.745 us | 0.1249 us | 0.1168 us | - | 32 B |
Blake2Sharp | 61EB59036B | 5.682 us | 0.0397 us | 0.0331 us | 0.1984 | 864 B |
S.D.HashFunction | 61EB59036B | 36.869 us | 0.1811 us | 0.1513 us | 2.1973 | 9344 B |

10MiB input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
----------------- |----------- |----------:|----------:|----------:|----------:|-----------:|
Blake2bRFC | 7B6AB409B7 | 82.62 ms | 0.3159 ms | 0.2800 ms | - | 0 B |
Blake2Sharp | 7B6AB409B7 | 16.59 ms | 0.1275 ms | 0.1193 ms | - | 864 B |
S.D.HashFunction | 7B6AB409B7 | 113.15 ms | 0.3898 ms | 0.3646 ms | 5937.5000 | 24905120 B |

I dropped the native DLL version from this run since we’ve already shown Blake2Sharp is faster, which makes it the proper reference to use going forward.

Notice that this implementation, in addition to being much slower than even the slow RFC version, allocates roughly 2.4 times the size of the input data on the 10MiB run (about 24.9 MB). A hashing function should only read the input and perform computations on it, not make multiple copies of it. I didn’t dig into the code to see what went wrong here, but this is a hidden performance trap waiting to get anyone who dares use this library.
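For contrast, a hash can be computed over arbitrarily large input with a small, fixed amount of working memory. The sketch below shows the general technique in Python; `hash_stream` and its 64KiB buffer size are illustrative choices, not anything taken from the libraries tested here:

```python
import hashlib
import io


def hash_stream(stream, digest_size: int = 5, chunk_size: int = 64 * 1024) -> bytes:
    """Hash a binary stream while reusing one fixed-size buffer."""
    hasher = hashlib.blake2b(digest_size=digest_size)
    buffer = bytearray(chunk_size)
    view = memoryview(buffer)
    while True:
        count = stream.readinto(buffer)  # fill the same buffer on every pass
        if not count:
            break
        hasher.update(view[:count])  # feed only the bytes actually read
    return hasher.digest()


payload = bytes(range(256)) * 4096  # 1MiB of sample data
print(hash_stream(io.BytesIO(payload)).hex())
```

The memory needed stays at one buffer plus the hash state, no matter how large the input grows.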

Sadly, I’m sure some people will pick this one from NuGet either because they mistake it for a Microsoft package or simply because they like the naming that looks like the Microsoft packages. There is a new policy in place on NuGet that prevents third-party packages named starting with ‘System.’, but Microsoft is allowing any existing packages to stay put. Beware.

This one also has a sibling package called System.Data.HashFunction.Blake2.Net40Async.

I wasn’t able to get that one to work in my benchmark app, although I’ll admit I didn’t try very hard. It appears to be the same basic thing as the one above but with the added trap of a ComputeHashAsync method. Hashing is a CPU-bound operation, so there’s no place for async in it. Trying to run the hash itself asynchronously just adds extra thread context-switching overhead.

If you are receiving data from somewhere asynchronously, simply use a hashing implementation that allows for incremental updates (the BLAKE2 algorithms support this) and update the hash synchronously with each data packet you receive asynchronously.
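The pattern described above, sketched in Python's `asyncio` rather than .NET: the I/O is awaited, while each hash update is an ordinary synchronous call. `packets` is a hypothetical stand-in for a network or file source, and `hash_packets` is an illustrative name:

```python
import asyncio
import hashlib


async def packets(data: bytes, size: int):
    """Hypothetical async source that yields the data in fixed-size packets."""
    for offset in range(0, len(data), size):
        await asyncio.sleep(0)  # stand-in for waiting on real I/O
        yield data[offset:offset + size]


async def hash_packets(source, digest_size: int = 5) -> bytes:
    hasher = hashlib.blake2b(digest_size=digest_size)
    async for packet in source:  # only the receive step is asynchronous
        hasher.update(packet)    # the hash update itself is a plain synchronous call
    return hasher.digest()


data = bytes(range(256)) * 64
digest = asyncio.run(hash_packets(packets(data, 1000)))
print(digest.hex())
```

The result is the same as hashing the whole buffer in one call, without the hash function needing any async surface of its own.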

The Butfor

But for one simple mistake, there would be 2 libraries in the ‘Good’ section. I like the honesty in the readme for Blake2Core:

“This is quite literally a copy/paste from BLAKE2 and built into a NuGet package, available here. I needed it in my .Net Core project, and I'm sure other people as well.”

This is an exact copy of the Blake2Sharp reference code, and it would have been exactly as good as my reference copy except that the NuGet package contains a debug build of the DLL, with optimizations disabled. In many cases, there isn’t much difference in performance between Release and Debug builds of .NET code, but for something computation-heavy like hashing, it can make a huge difference.

3-byte input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
------------ |----------- |-----------:|----------:|----------:|-------:|----------:|
Blake2bRFC | 44229FC0EF | 1,134.2 ns | 5.146 ns | 4.814 ns | 0.0057 | 32 B |
Blake2Sharp | 44229FC0EF | 524.6 ns | 4.869 ns | 4.316 ns | 0.2050 | 864 B |
Blake2Core | 44229FC0EF | 1,877.0 ns | 11.314 ns | 10.583 ns | 0.2041 | 864 B |

3.19KiB input

Method | Hash | Mean | Error | StdDev | Gen 0 | Allocated |
------------ |----------- |----------:|----------:|----------:|-------:|----------:|
Blake2bRFC | 61EB59036B | 26.367 us | 0.1776 us | 0.1661 us | - | 32 B |
Blake2Sharp | 61EB59036B | 5.652 us | 0.0292 us | 0.0259 us | 0.1984 | 864 B |
Blake2Core | 61EB59036B | 26.023 us | 0.1694 us | 0.1584 us | 0.1831 | 864 B |

10MiB input

Method | Hash | Mean | Error | StdDev | Allocated |
------------ |----------- |---------:|----------:|----------:|----------:|
Blake2bRFC | 7B6AB409B7 | 83.79 ms | 0.4101 ms | 0.3636 ms | 0 B |
Blake2Sharp | 7B6AB409B7 | 16.58 ms | 0.1105 ms | 0.1033 ms | 864 B |
Blake2Core | 7B6AB409B7 | 78.03 ms | 0.3949 ms | 0.3694 ms | 864 B |

Without JIT optimization, this library is almost as slow as the RFC version. The only place it has an advantage is that it doesn’t do all the byte shuffling to ensure the words are in little-endian order as required by BLAKE2. The RFC code does that shuffling whether it’s needed or not. The Blake2Sharp code copies the data without shuffling if it’s already ordered correctly, and that savings shows up in the 10MiB run.
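To make the byte-order point concrete, here is a Python analogy (not the Blake2Sharp or RFC code) of the two ways to turn a 128-byte block into sixteen 64-bit words. The portable path always decodes little-endian explicitly; the fast path reinterprets the bytes in native order, which gives the same words only on a little-endian machine:

```python
import struct
import sys

block = bytes(range(128))  # one 128-byte BLAKE2b message block

# Portable path: always decode sixteen 64-bit little-endian words.
words_portable = struct.unpack("<16Q", block)

# Fast path: reinterpret the bytes in native order without any shuffling.
# This matches the portable result only on little-endian hosts.
if sys.byteorder == "little":
    words_native = tuple(memoryview(block).cast("Q"))
else:
    words_native = words_portable  # fall back to explicit decoding

print(hex(words_portable[0]))
print(words_native == words_portable)
```

On the x86 hardware used for these benchmarks, the data is already in the order BLAKE2 wants, so the explicit decoding is pure overhead.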

By the way, BenchmarkDotNet has a validator that detects this problem and actually refuses to run benchmarks unless you override it. I had to do that for this run so we could see the impact.

Ultimately, this one counts as another performance trap, so don’t use it unless it gets an update.

[Once again, I’ll detail a better BLAKE2b implementation in the second part of this post.]

This library also uses a .NET Standard 1.6 build target, so it can’t be used with older versions of .NET Framework (including 4.6). There’s no reason it wouldn’t be compatible; it’s just not multi-targeted.

The Weird

I’m honestly not sure what to make of Isopoh.Cryptography.Blake2b.

The hashing implementation itself is taken straight from the Blake2Sharp reference. This library, however, adds a feature that uses a ‘SecureArray’ during the hashing. From what I understand, the SecureArray uses PInvoke to request that the OS lock access to memory during hashing, and then it securely zeroes that memory before returning. This is not without overhead, however, as the benchmarks show.

3-byte input

Method | Hash | Mean | Error | StdDev | Gen 0 | Gen 1 | Gen 2 | Allocated |
------------ |----------- |---------------:|---------------:|-----------------:|----------:|----------:|----------:|------------:|
Blake2bRFC | 44229FC0EF | 1,142.3 ns | 7.136 ns | 6.326 ns | 0.0057 | - | - | 32 B |
Blake2Sharp | 44229FC0EF | 534.7 ns | 4.650 ns | 4.349 ns | 0.2050 | - | - | 864 B |
Isopoh | 44229FC0EF | 9,187,594.5 ns | 386,206.608 ns | 1,114,294.368 ns | 2332.5195 | 2314.4531 | 2314.4531 | 710953144 B |

3.19KiB input

Method | Hash | Mean | Error | StdDev | Gen 0 | Gen 1 | Gen 2 | Allocated |
------------ |----------- |-------------:|------------:|--------------:|----------:|----------:|----------:|------------:|
Blake2bRFC | 61EB59036B | 26.880 us | 0.1841 us | 0.1722 us | - | - | - | 32 B |
Blake2Sharp | 61EB59036B | 5.629 us | 0.0273 us | 0.0256 us | 0.1984 | - | - | 864 B |
Isopoh | 61EB59036B | 8,094.502 us | 727.7956 us | 2,134.4986 us | 1724.1211 | 1710.4492 | 1710.4492 | 524302827 B |

10MiB input

Method | Hash | Mean | Error | StdDev | Allocated |
------------ |----------- |---------:|----------:|----------:|----------:|
Blake2bRFC | 7B6AB409B7 | 82.77 ms | 0.4741 ms | 0.4202 ms | 0 B |
Blake2Sharp | 7B6AB409B7 | 16.63 ms | 0.1210 ms | 0.1132 ms | 864 B |
Isopoh | 7B6AB409B7 | 16.67 ms | 0.1183 ms | 0.1106 ms | 984 B |

I can’t tell whether the ridiculous amount of memory allocated is a bug or by design. It’s very odd that it’s highest with the smallest input. And I can’t tell whether the lack of extra allocation on the 10MiB input is because it simply skips the extra processing past a certain size threshold or because the memory use is related to partially-filled blocks.

Although it would be accurate to say it’s more than 17,000x slower than Blake2Sharp with small inputs, it might be fairer to say it has a high fixed overhead. Either way, it’s not suitable for general-purpose hashing. But unlike the libraries I’ve reviewed so far, this one doesn’t necessarily claim to be. I’m not sure of the value of securing the hash state memory when both the key and message data have been passed around insecurely before the library has a chance to use them, but I might be missing something.
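The arithmetic behind those two readings can be checked directly from the mean times in the tables above. This short Python snippet only restates the reported numbers, converted to nanoseconds; the dictionary layout is an arbitrary choice:

```python
# Mean times from the benchmark tables above, converted to nanoseconds.
means_ns = {
    "3-byte": {"Blake2Sharp": 534.7, "Isopoh": 9_187_594.5},
    "3.19KiB": {"Blake2Sharp": 5_629.0, "Isopoh": 8_094_502.0},
    "10MiB": {"Blake2Sharp": 16_630_000.0, "Isopoh": 16_670_000.0},
}

slowdown = {}
extra_ms = {}
for label, row in means_ns.items():
    slowdown[label] = row["Isopoh"] / row["Blake2Sharp"]           # relative cost
    extra_ms[label] = (row["Isopoh"] - row["Blake2Sharp"]) / 1e6   # absolute cost in ms
    print(f"{label}: {slowdown[label]:,.1f}x slower, {extra_ms[label]:.2f} ms extra")
```

The ratio works out to roughly 17,000x on the 3-byte input and about 1,400x on the 3.19KiB input, but the absolute difference is roughly 9 ms and 8 ms respectively, and it all but disappears on the 10MiB input.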

I’d recommend you stay away from this library unless you truly need whatever specialized benefit it offers and have good explanations for the issues I pointed out above.

The Others

I have to give an honorable mention to NSec.Cryptography.

This library is based on libsodium, which is a relatively mature platform-native security library. It didn’t meet my criteria in that it explicitly disallows hashes shorter than 32 bytes and is, therefore, not RFC-compliant. I couldn’t tell whether this was a limitation of libsodium or of its .NET wrapper. I also didn’t see a way to do a keyed hash, but I might have just missed it. I can say that for general-purpose hashing, if you don’t need to use a key and can use a full-length digest, this library works and is slightly faster than the best I could do with managed code. In fact, the only thing I found that’s faster is an AVX2 version of the BLAKE2 reference code. I’ll be doing a port of that AVX2 version once support is available (should be coming in .NET Core 2.2) so check back for that later.

And finally, there’s Multiformats.Hash.

This one lists BLAKE2 among its algorithms, but to quote from its readme:

“This is not a general purpose hashing library, but a library to encode/decode Multihashes which is a "container" describing what hash algorithm the digest is calculated with. The library also support calculating the digest, but that is not it's main purpose. If you're looking for a library that supports many algorithms and only want the raw digest, try BouncyCastle or the built-ins of the .net framework.”

Enough said there. It may or may not be any good at what it does, but it definitely does not do what I need it to do.

The Conclusion

Obviously, this was a very small sample size from the 115k+ packages on NuGet today and may not be representative of packages of all types. But the lesson is clear: there are no quality checks on NuGet, and download count is absolutely no indication of quality. In fact, download count tends to be self-reinforcing. People gravitate toward the “popular” packages, making it even more dangerous when one of these has a serious bug or design flaw. Not to mention, nuget.org seems to sort by popularity.

It’s dangerous to bring a library into your project without proper testing, and neither the presence of unit tests in a project nor a lack of open issues is any guarantee that the code isn’t broken. As I like to say, “bad coders code bad tests that test their bad code badly”. Always test for yourself.

Tune in next time for some details on my own improved BLAKE2 implementations using the new X86 Intrinsics in .NET Core 2.1. Until then, sleep tight, and don’t let the bedbugs bite…